Fake Case Law, Real Consequences: AI Misuse Shakes Up South African Courtrooms

When AI ‘hallucinates’, justice hangs in the balance.

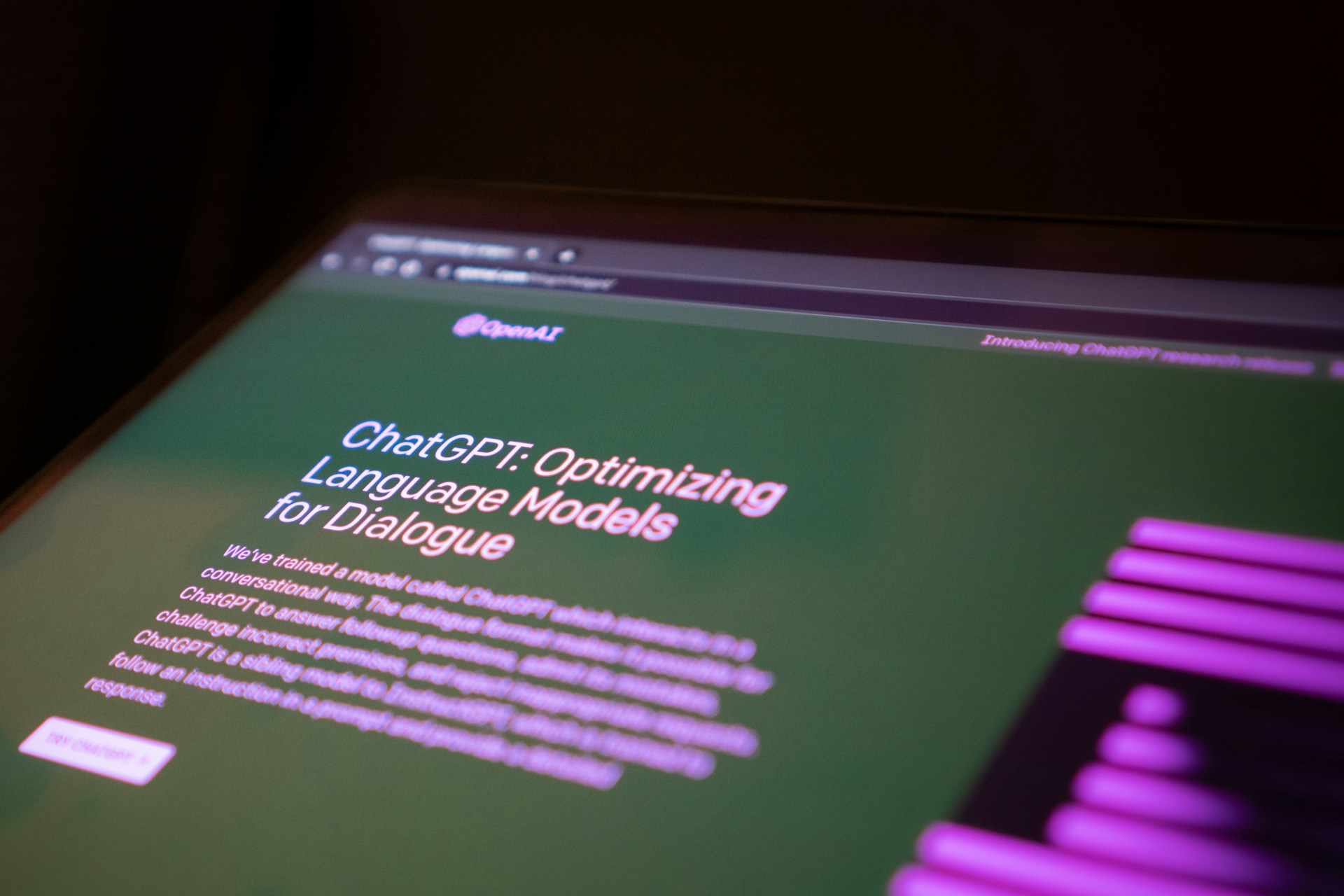

The promise of artificial intelligence has long captured the imagination of the legal profession — faster research, fewer hours poring over precedents, more time for courtroom strategy. But what happens when that promise becomes a risk?

South Africa is now grappling with that very question, as the Legal Practice Council (LPC) takes urgent steps to curb the misuse of generative AI in legal proceedings. At the heart of the issue are two court cases, Mavundla vs the KwaZulu-Natal MEC of Cooperative Governance & Traditional Affairs and Northbound Processing vs the SA Diamond and Precious Metals Regulator, in which AI-generated research included fake case law cited as precedent.

A Dangerous Misstep in the Justice System

In both matters, legal professionals submitted court documents referencing judgments that didn’t exist. These so-called “hallucinations”, a known flaw of large language models, are not just embarrassing errors. The LPC sees them as serious ethical breaches that could derail the criminal justice system and, in extreme cases, even get the practitioner responsible disbarred.

“It’s not about whether the misleading info was added by mistake,” said LPC deputy chair Llewelyn Curlewis. “Whether intentional or not, it puts the credibility of our courts at risk.”

South Africa’s legal system relies heavily on the doctrine of precedent. Citing false rulings doesn’t just weaken arguments — it threatens the consistency and stability of the entire legal framework. “If unverified AI outputs start influencing judgments, the consequences could be devastating,” added Curlewis.

A Policy in Progress

The LPC is now working on a national policy that will set clear boundaries for how legal practitioners can use AI. It won’t be a simple checklist. The Council wants to consult IT experts, legal ethicists, and even university professors who’ve already been wrestling with the same issue in academic settings.

“This is not a legal sector issue alone,” Curlewis said. “Academics have been dealing with students using AI to generate work. We’re looking to them for guidance on how to manage this responsibly.”

The idea is not to ban AI tools outright, but to build a framework where any AI-assisted research must be fully verified before it’s submitted in court. For now, the Council’s message is clear: if you’re going to use AI, you need to fact-check everything — no exceptions.

A National Wake-Up Call

Social media reaction has been swift. Some South Africans expressed shock that AI-generated nonsense could make it into official court documents. “If lawyers can be fooled by a robot, what chance do the rest of us have?” asked one Reddit user. Others pointed out that while AI is a useful tool, it should never replace human diligence.

Legal tech experts are split. Some argue that over-regulating AI could stifle innovation and productivity. Others say the legal system, unlike other industries, cannot afford mistakes — not when people’s rights, freedom, or lives are on the line.

The Bigger Picture: Ethics in the AI Era

This isn’t just a South African problem. Globally, courts and law firms are trying to figure out how to embrace AI’s efficiency without sacrificing ethical standards. But South Africa’s swift response may set a precedent of its own — one where legal integrity trumps digital convenience.

AI can assist, but it cannot think, reason, or bear responsibility. That still falls squarely on human shoulders. As the LPC sharpens its policy pencil, the message is simple: technology must serve justice, not the other way around.

Source: TechCentral

For more news in Johannesburg, visit joburgetc.com