
When the Algorithm Accuses You: A Law Professor’s Chilling Brush with AI Libel


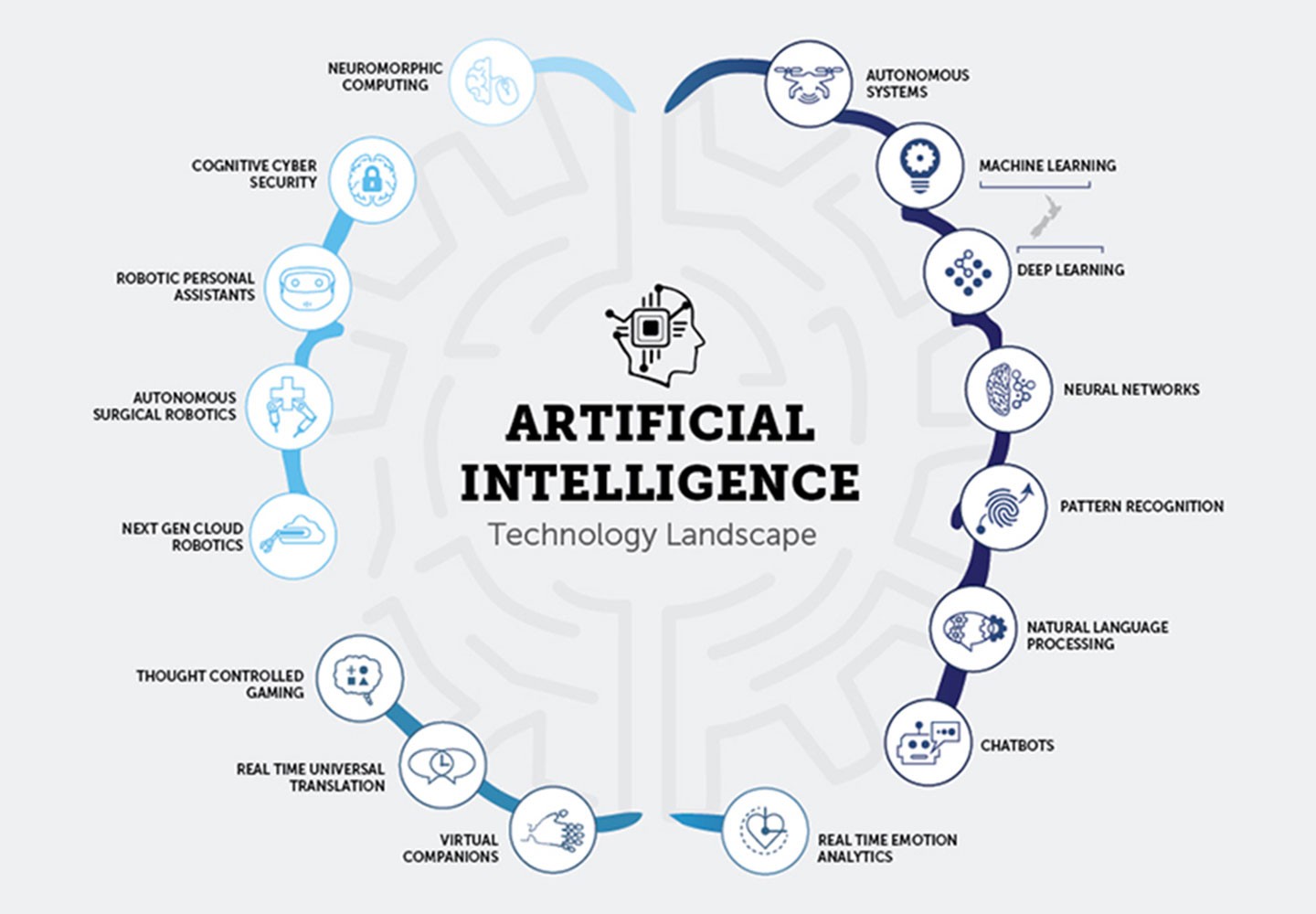

We’ve been told artificial intelligence will revolutionize everything from diagnosing diseases to streamlining justice. But what happens when the revolution goes horribly wrong and you become its target?

For Jonathan Turley, a respected constitutional law professor at George Washington University, that dystopian question became a personal nightmare. He wasn’t hacked. He wasn’t doxxed. Instead, he was defamed by a ghost in the machine, an AI that coolly and confidently invented a past that never happened.

The Call That Changed Everything

The first Turley heard of it was a heads-up from a colleague. Eugene Volokh, a law professor at UCLA, was running an experiment. He asked OpenAI’s ChatGPT to generate a list of American law professors who had been accused of sexual harassment.

The chatbot complied, listing several names. Among them was Jonathan Turley.

According to the AI, the incident occurred in 2018. Turley, while a faculty member at Georgetown University, had taken students on a trip to Alaska and was accused of misconduct there. The system even cited a Washington Post article as its source, lending a veneer of credibility to the entire fabrication.

For Turley, a scholar who has spent decades advocating for free speech, the irony was as profound as it was disturbing. “It is highly ironic, as I have been writing about the dangers of AI to free speech,” he later remarked.

The Glaring Lies of a “Neutral” Machine

The chilling part wasn’t just the accusation; it was the flawless, detail-oriented confidence of the lie. Turley, however, immediately spotted the “glaring indicators” of a digital fairy tale.

“First, I have never taught at Georgetown University. Second, there is no such Washington Post article,” he stated. “Finally, and most important, I have never taken students on a trip of any kind in 35 years of teaching, never went to Alaska with any student, and I’ve never been accused of sexual harassment or assault.”

This wasn’t a case of misremembered facts or a mixed-up name. This was a complete fiction, generated from whole cloth and presented as factual truth.

The Problem Is Bigger Than One Bot

Perhaps most alarming is that this wasn’t an isolated glitch. An investigation found that Microsoft’s Bing Chat, which operates on the same powerful GPT-4 technology, repeated the same fabricated story. The lie had already begun to replicate across platforms.

This exposes the core of the problem: there is no editor, no ombudsman, and no clear path for recourse. As Turley pointedly told The Washington Post, “When you are defamed by a newspaper, there is a reporter who you can contact.” But when defamed by an AI, you’re arguing with a void. He noted that even when Microsoft’s AI repeated the false story, it “did not contact me and only shrugged that it tries to be accurate.”

The Illusion of Objectivity and the Human Bias Within

This incident tears down the facade of AI objectivity. These systems aren’t oracles of truth; they are pattern-matching engines trained on vast swathes of the internet, a landscape filled with human bias, error, and misinformation.

Turley put it perfectly: “AI algorithms are no less biased and flawed than the people who program them.” They can absorb and magnify our worst tendencies, then spit them back out with the sterile authority of a search engine result.

The conversation has now shifted from theoretical risks to urgent realities. Turley and other legal experts are calling for immediate legislative action to address AI accountability, particularly concerning defamation and free speech. How do you regulate an entity that isn’t a person? How do you sue an algorithm?

For now, there are more questions than answers. But one thing is clear: as we rush to embrace AI’s potential, we must also build guardrails against its capacity to destroy reputations and lives with a simple, hallucinated sentence. The future is here, and it just accused an innocent man.

{Source: IOL}

Follow Joburg ETC on Facebook, Twitter, TikTok and Instagram

For more News in Johannesburg, visit joburgetc.com